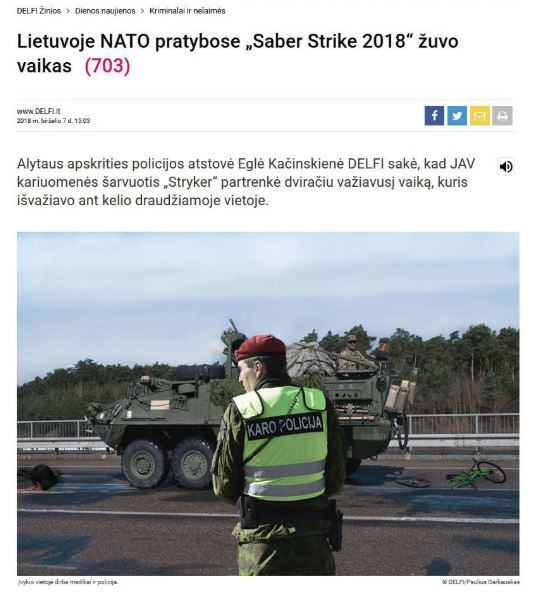

On June 7, during a training exercise in the Baltics, four U.S. Army Stryker vehicles driving along a road between Kaunas and Prienai, Lithuania, collided when the lead vehicle braked too hard for an obstacle on the roadway. Not long after the incident, a blog post made to look like a popular Lithuanian news outlet claimed the Americans had killed a local child in the collision.

A doctored image accompanied the post, showing seemingly unconcerned soldiers near a crushed bicycle and a child’s corpse.

“This is a very typical example of the hostile information, and proves we are already being watched,” Lithuanian Defense Minister Raimundas Karoblis said of the fabricated event during a June 8 meeting with NATO officials. “We have no doubt that this was a deliberate and coordinated attempt aiming to raise general society’s condemnation to our allies, as well as discredit the exercises and our joint efforts on defense strengthening.”

In this case, the phony image and news article were quickly refuted, but what happens when it’s not so easy to tell truth from fiction?

The ability to distort reality is expected to reach new heights with the development of so-called “deep fake” technology: manufactured audio recordings and video footage that could fool even digital forensic experts.

“I would say 99 percent of the American population doesn’t know what it is, even though for years they’ve been watching deep fakes in science fiction movies and the like, in which special effects are as realistic as they’ve ever been,” Sen. Marco Rubio, R-Fla., said Thursday before a technology panel at the Heritage Foundation. “But never before have we seen that capability become so available right off the shelf.”

The emerging technology could be used to generate Kompromat, a Russian contraction meaning “compromising material,” that portrays an individual in deeply embarrassing situations, making them ripe for blackmail by a foreign intelligence service. Just as likely, deep fake technology could be used to falsify recordings of meetings that actually took place, altering what was said.

For example, the only audio recording of a closed-door meeting could be doctored to make it appear that a senior U.S. official told a hypothetical Russian counterpart “don’t worry about the Baltics, we won’t lift a finger to defend them,” said Bobby Chesney, an associate dean at the University of Texas School of Law who studies the impact of this emerging capability.

The geopolitical fallout from such a declaration would be hard to overcome.

National intelligence agencies and even insurgent groups already fabricate crimes by other countries’ military forces, Chesney said. Deep fakes could make these existing disinformation campaigns more convincing.

“Often it’s a claim about killing civilians or harm to civilian populations,” he said. “And yeah, you can have actors play the role and impersonate, but how much the better if you can use the technology of deep fakes to make more credible instances of supposed atrocities?”

Russian intelligence has long been known for its willingness to blackmail and discredit foreign officials. In 2009, a U.S. diplomat working on human rights issues in Russia was depicted in a grainy video purchasing a prostitute. Only it wasn’t him. The video spliced actual footage of the American making phone calls with fake footage of him in the compromising situation, according to the U.S. State Department.

This sort of propaganda also existed during the Cold War, but information now travels far faster, and the software involved is increasingly accessible.

“One of the ironies of the 21st century is that technology has made it cheaper than ever to be bad,” Rubio said. “In the old days, if you wanted to threaten the United States, you needed 10 aircraft carriers, nuclear weapons and long-range missiles. ... Increasingly, all you need is the ability to produce a very realistic fake video.”

Fake videos used to be difficult to produce. They required an army of visual effects artists and sophisticated computers, but that has changed recently.

Deep fakes began drawing attention after several universities and other organizations published “puppeteering systems,” said Chris Bregler, a senior staff scientist at Google’s artificial intelligence division.

“That means you take lots of video of somebody and then use machine-learning to change the lips or some other parts of the face, and it looks like someone said something entirely different,” he explained.

The word “deep” in deep fakes refers to deep learning, which uses neural networks with many layers to produce more realistic images. The democratization of information has made this technology all the more accessible. Last year, someone posted computer code on Reddit, an aggregation and message board website, that allows users to create deep fakes.

“If you have some software engineering skills, you can download that code, turn it into an application, collect a bunch of examples of faces of a person who is there in a video and faces of the person you want to replace, and then you buy a graphics card that costs less than $1,000,” Bregler said. “You let your system run on your home computer or laptop for sometimes several days, or one day, and then it creates a deep fake.”

The best counter to deep fakes appears to be awareness. Technology to spot phony recordings is advancing in lockstep with deep fake software itself, Bregler said.

But in an age of mass communication and instant information, the concern is that the truth will arrive too late.

“It’s true we can eventually debunk, but the truth doesn’t ever quite catch up with the initial lie, if the initial lie is emotional and juicy enough,” Chesney said.

Kyle Rempfer was an editor and reporter who has covered combat operations, criminal cases, foreign military assistance and training accidents. Before entering journalism, Kyle served in U.S. Air Force Special Tactics and deployed in 2014 to Paktika Province, Afghanistan, and Baghdad, Iraq.